UWinnipeg researchers are developing technology that could revolutionize food production thanks to a Weston Seeding Food Innovation grant. The multi-disciplinary team includes MSc student Maryam Bafandkar, Dr. Christopher Henry, Dr. Ed Cloutis, Dr. Chris Bidinosti, MSc student Reid Lowden, PhD student Chen-Yi Liu, Dr. Jonathan Ziprick (Red River College), MSc student Pu Junyao, and post-doctoral fellow Dr. Michael Beck.

Thanks to a $250,000 Weston Seeding Food Innovation grant, the University of Winnipeg has launched a cutting-edge research project that could transform the way we produce food, allowing farmers in Canada and beyond to care for large prairie crops as efficiently as a backyard garden.

UWinnipeg physics professor Dr. Christopher Bidinosti is leading the project along with applied computer science professor Dr. Christopher Henry. Their research team includes experts from UWinnipeg, Red River College, the University of Saskatchewan, Northstar Robotics, Sightline Innovation, the Canola Council of Canada, and Manitoba Pulse & Soybean Growers.

“Most gardeners, because their gardens are small, can pick every weed by hand or snip off every leaf that has rust on it, to give it that intimate care,” said Bidinosti. “If we can do that on the scale of the farm or the Canadian prairies, imagine how much food you could grow?”

As camera sensors shrink in size and self-driving vehicles continue to improve, this idea of “gardening on a massive scale” is becoming possible.

“There’s been a revolutionary change in computing hardware that has opened the door for many cool, real-world applications of machine learning,” said Henry. “Digital agriculture is the next big industry to benefit immensely from this technology.”

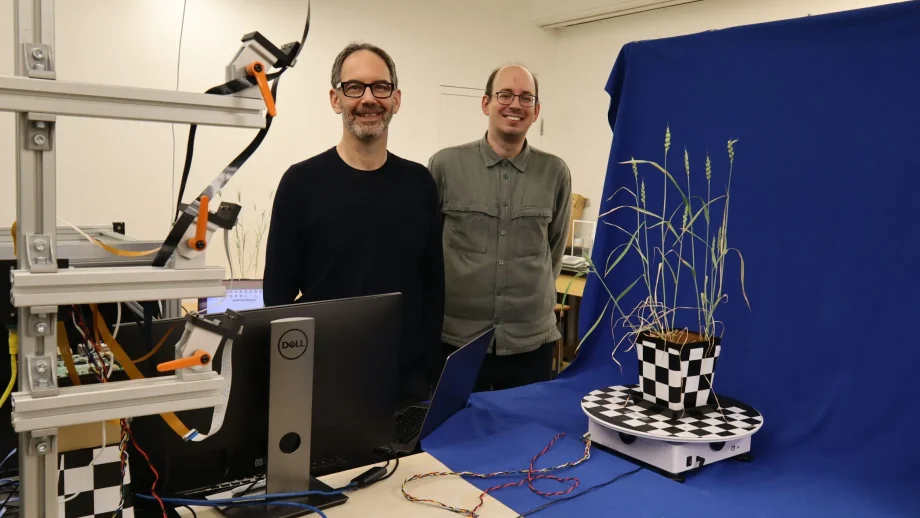

But to train a computer to recognize (and tend) a prairie crop, researchers must give it extensive examples of plants and weeds. These come in the form of extremely large collections of pre-identified images of crop plants and weeds, taken from many different angles. Creating such a dataset by hand would take far too many people and an unrealistic amount of time.

“The main goal of our research project is to develop the means to automatically generate and label these images through a computer controlled camera system. We will then make the images publicly available for use by Canadian researchers and companies, because the fastest way to innovation is to get this data into the hands of more innovators,” said Bidinosti.

Before moving the technology outdoors, the team will test its approach in a controlled environment at UWinnipeg and use the images it collects to develop software at the Dr. Ezzat A. Ibrahim GPU Education Lab. Post-doctoral fellow Dr. Michael Beck will be working on the camera system and software, along with three UWinnipeg MSc students who will gain hands-on experience analyzing and solving abstract and technical problems in the emerging field of digital agriculture.

“This is uncharted territory for me, as my research so far has focused on things you couldn’t touch, like code and formulas,” said Beck. “If someone would have told me a decade ago that I’d live in Winnipeg and work on a system to image plants, basically make a photoshoot with them, and classify them with neural networks, I would have had a hard time believing it.”

Researchers in the Departments of Biology, Chemistry, and Geography will also contribute to the project, especially Drs. Rafael Otfinowski and Ed Cloutis, who offer expertise with greenhouse-based experiments and optical imaging, respectively. Collaborators outside UWinnipeg bring further expertise in agriculture, botany, computer science, plant science, remote sensing, and robotics.

Former UWinnipeg postdoctoral fellow in physics, Dr. Jonathan Ziprick, will be leading the effort at Red River College.

“The next revolution in agriculture can be achieved with current technology, and through collaboration between our academic and agricultural communities, Manitoba is the place to do it,” said Ziprick.

With such a diverse, multi-disciplinary team and the burgeoning capacity of machine learning to crunch massive amounts of data, Bidinosti and Henry expect to create an unprecedented number of labelled images, planting the seed for new solutions in global food production.

“Machine learning is disrupting a lot of fields, but, in this case, it is only going to enhance the capabilities of farmers,” said Henry. “Machine learning will become just another instrument farmers add to their toolbox.”
